Data Mining and Visualisation

数据作业代写 Overal precision, recall, and f-score are the averages of the precision, recall, and f-score values of individual objects in ……

For each of the questions Q3,4,5,6 include: 数据作业代写

- one chart (with 3 sets of values: precision, recall, and f-score)

- table/list with the values used to build the chart

For Q7 include the answer.

Can we use some methods provided by numpy to do some basic calculation or comparison such as sum(), square(), min()... or have to implement them manually?

Yes, anything you can find in NumPy, feel free to use 数据作业代写

For the F score can we still use (2 precision*recall)/(precison+recall) using overall precision and recall or do we have to work out the F scores for each element?

yes, we first compute F-score for each element in the dataset and then average it to get the F-score for the entire dataset.

Are we allowed to drop the first column before we feed it into the algorithm function or should the function drop it for us?

yes, we should at some point ignore the first column as it is not numerical.

If we are implementing the k-means++ initial representative selection scheme, a) can we use this scheme to select initial representatives for k-medians? and b) if yes. Should we use manhattan distance to determine selection probabilities when initialising reps for k-medians?

This is a good question. 数据作业代写

I don't know about applications of the k-means++ rule to the k-medians algorithm.

I guess this is a natural thing to do, so feel free to do this. But make sure that you explain what you are doing in the comments.

We need to get an overall precision, recall, f-score for each k, is that right?

For example, the answer is going to like: when k = 1. The precision is xxx, the recall is xxx, and f-score is xxx

Yes, this is correct

I wonder how to calculate the overall precision, recall, f-score. Is it the average of the 4 values?

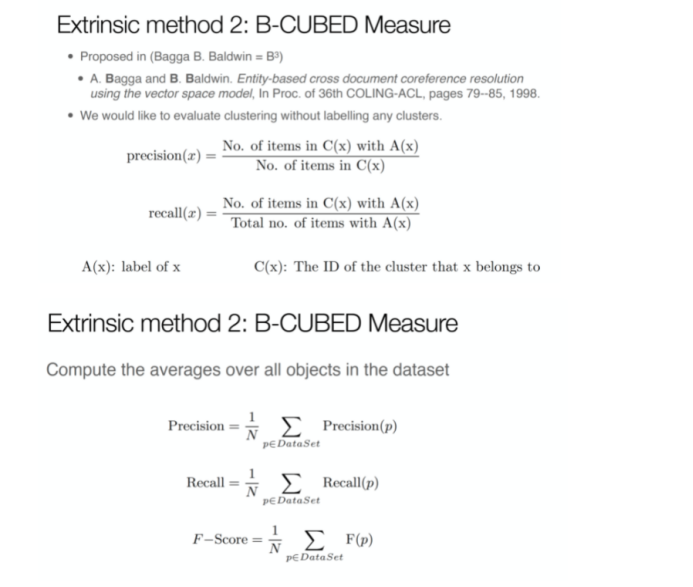

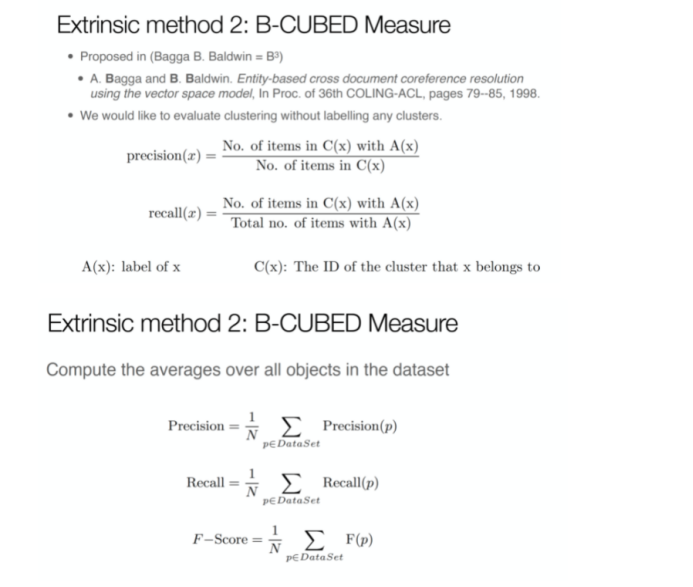

Overal precision, recall, and f-score are the averages of the precision, recall, and f-score values of individual objects in the dataset. The formula for this can be found in the slides. 数据作业代写

I was just wondering: is it desirable for our randomised centroids to be 'consistent' between different values of k?

In other words, if I initialise k=3 with three randomly chosen points, is it better for k=4 to consist of those same three randomly chosen points, plus an additional randomly chosen point? This way, k=5 will consist of the same points as k=4 plus an additional randomly chosen point (and so on).

I could edit my code such that the centroids initialised for different k values are consistent from one function call to another but bear no 'resemblance' to one another (in the manner suggested above); however, it seems to me that, if I were to do that, the performance of the algorithm (for different k values) would depend to a great extent on the arbitrariness of the initialisation of the centroids.

there is no requirement for the initial representatives to be consistent between different values of k.

Indeed, the result may greatly depend on the initial choice. You could play with different seed values and pick the one that gives you a better 'picture'.

Can I please know how to calculate the precision and recall? Are we supposed to initially categorise each label into 0,1,2,3 and then figure after calculation if all 0s are in one cluster, 2s are in another cluster and so on and so forth? And then compare all the cluster values to the initial label classification to check for TN, FN, TP, FP?

发表回复

要发表评论,您必须先登录。