Natural Language Processing Exam

Problem 1 Language Models (10 credits)

1.1 (0 P) Just to be sure: Write your first (given) name, your last (family) name, and your matriculation number (just as a sanity check).

1.2 (8 P) A person claims: "modern neural language models trained on large text corpora really are a manifestation of true artificial intelligence". As an NLP expert, state two arguments supporting that claim and two arguments against that claim! Do NOT state MORE THAN TWO arguments each!

1.3 (2 P) Provide the mathematical expression for a tri-gram approximation of P(w1w2w3w4w5).
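As a worked reference: a tri-gram model conditions each word on at most the two preceding words, so the chain rule is truncated as follows. Sentence-boundary padding symbols are omitted here, which is an assumption about the intended notation.

$$P(w_1 w_2 w_3 w_4 w_5) \approx P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1, w_2)\,P(w_4 \mid w_2, w_3)\,P(w_5 \mid w_3, w_4)$$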

Problem 2 Simple sentiment analysis with Naïve Bayes classifiers (10 credits)

2.1 (2 P) MAP solution for smoothing: why do we propose a Dirichlet prior p(θc |α) in the expression for the posterior p(θc |D)?

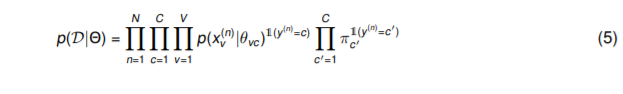

2.2 (2 P) While the simple MLE solution is [formula not shown], the MAP solution using a Dirichlet prior p(θc |α) is [formula not shown]. What are the values for αv that we have to choose to get Laplace smoothing, and why?
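For orientation, the two estimates referred to in 2.2 are commonly written as below. The notation is an assumption, since the text above does not fix the symbols: $N_{cv}$ is the number of occurrences of word $v$ in documents of class $c$, $N_c = \sum_{v'} N_{cv'}$, and $|V|$ is the vocabulary size. The MAP expression is the mode of the Dirichlet posterior and is valid when every $N_{cv} + \alpha_v > 1$.

$$\hat{\theta}^{\text{MLE}}_{cv} = \frac{N_{cv}}{N_c}, \qquad \hat{\theta}^{\text{MAP}}_{cv} = \frac{N_{cv} + \alpha_v - 1}{N_c + \sum_{v'} \alpha_{v'} - |V|}$$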

2.3 (1 P) Why is the Naive Assumption called "naive"?

2.5 (4 P) Using all of the modern arsenal of NLP: how would you solve the problem of sentiment classification of product reviews?

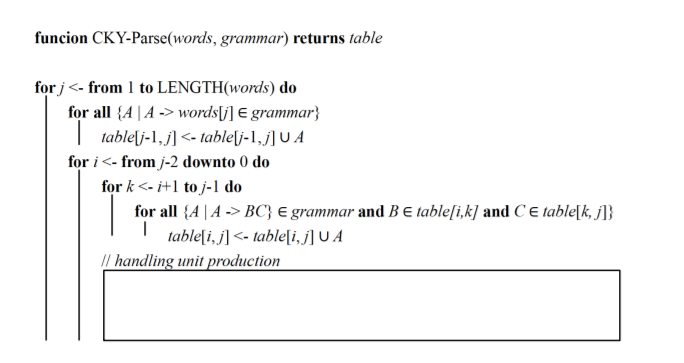

Problem 3 Constituency Parsing and Chunking (10 credits)

3.1 (5 P) The CYK algorithm requires the grammar to be in CNF which requires eliminating unit productions (A → B) from the grammar. Augment the standard CYK algorithm so that it can also handle unit productions!
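One possible way to approach 3.1 is to keep the usual CYK table and, after filling each cell, close it under the unit productions. The sketch below assumes a hypothetical grammar encoding chosen only for illustration: `lexical` maps a word to the nonterminals that produce it, `binary` maps a pair (B, C) to the nonterminals A with A → B C, and `unit_rules` maps B to the nonterminals A with A → B. The toy grammar is likewise made up.

```python
from collections import defaultdict


def unit_closure(symbols, unit_rules):
    """Return symbols plus every A reachable through a chain of unit rules A -> B."""
    closed = set(symbols)
    agenda = list(symbols)
    while agenda:
        b = agenda.pop()
        for a in unit_rules.get(b, ()):
            if a not in closed:
                closed.add(a)
                agenda.append(a)
    return closed


def cyk_with_units(words, lexical, binary, unit_rules, start="S"):
    """CYK recognizer in which every cell is closed under unit productions."""
    n = len(words)
    table = defaultdict(set)  # (i, j) -> nonterminals deriving words[i:j]
    for i, word in enumerate(words):
        table[i, i + 1] = unit_closure(lexical.get(word, set()), unit_rules)
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            cell = set()
            for k in range(i + 1, j):  # split point between the two children
                for b in table[i, k]:
                    for c in table[k, j]:
                        cell |= binary.get((b, c), set())
            # The only change to standard CYK: apply unit rules to the finished cell.
            table[i, j] = unit_closure(cell, unit_rules)
    return start in table[0, n]


# Hypothetical toy grammar: S -> NP VP, VP -> V NP, VP -> V, NP -> N
LEXICAL = {"dogs": {"N"}, "cats": {"N"}, "chase": {"V"}, "sleep": {"V"}}
BINARY = {("NP", "VP"): {"S"}, ("V", "NP"): {"VP"}}
UNIT = {"N": {"NP"}, "V": {"VP"}}
```

With this grammar, `cyk_with_units("dogs sleep".split(), LEXICAL, BINARY, UNIT)` relies on both unit rules (NP → N and VP → V) to recognize the sentence.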

Problem 4

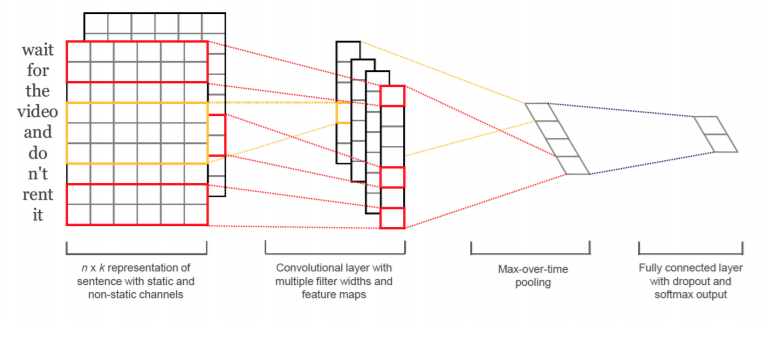

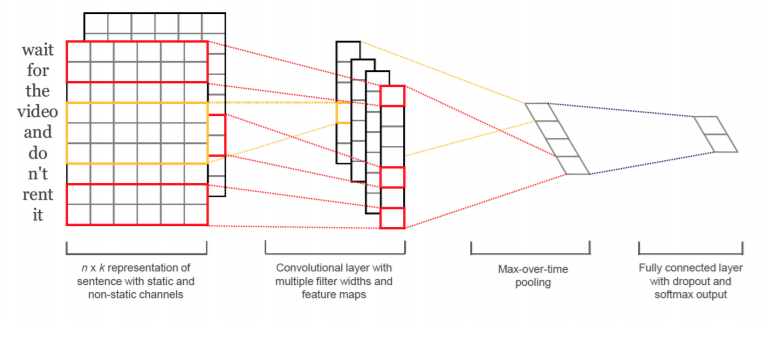

Convolutional NN for sentence classification tasks (paper Kim, Y. (2014). Convolu4 tional neural networks for sentence classification) (10 credits) Convolutional NN for sentence classification tasks (paper Kim, Y. (2014). Convolutional neural networks for sente

4.1 (5 P) Explain the motivation for using two channels in the input!

4.2 (5 P) What is the motivation for using several feature maps in the convolutional layer?

Problem 5 Modern contextual embeddings (10 credits)

5.1 (2 P) You want to use pretrained embeddings for some NLP task. What is the technical difference in the data that you get, and in the way you use it, when (a) downloading pretrained GloVe or (b) downloading pretrained BERT?

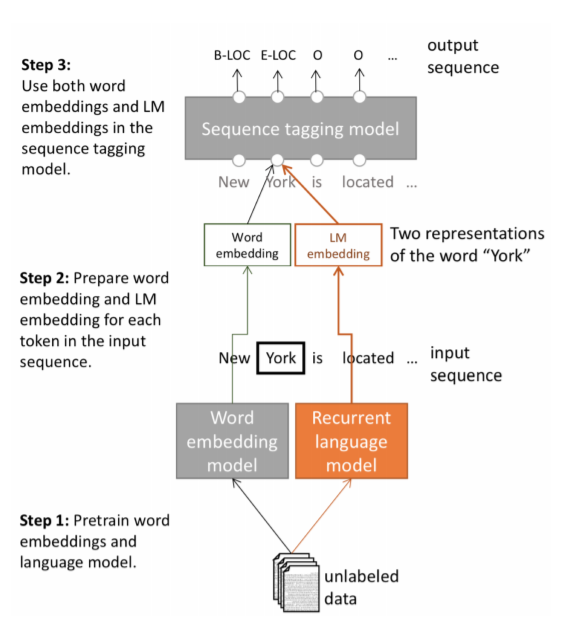

5.2 (2 P) Peters et al. (2017) "Semi-supervised sequence tagging with bidirectional language models" (Tag-LM paper, "pre-ELMo") (see image 5.2): Motivate why they are using the "LM embeddings" from the "Recurrent language model"!

5.3 (2 P) Key motivations to go from RNN-LM-based contextual embeddings (as in ELMo) to BERT: Why is bi-directionality desirable for sentence-level tasks?

5.4 (2 P) Key motivations to go from RNN-LM-based contextual embeddings (as in ELMo) to BERT: Why is bi-directionality a problem in RNN-LM-based approaches? How do you address that in BERT?

5.5 (2 P) Key motivations to go from RNN-LM-based contextual embeddings (as in ELMo) to BERT: If you wanted to stick to the RNN-LM idea for contextual embeddings, how could you cope with the bi-directionality problem?

Problem 6 Transformer models (Vaswani et al. (2017): Attention Is All You Need) (10 credits)

6.1 (2 P) Additive attention may outperform dot-product attention for large dimensions. What is a reason for that? How is the problem solved in the Transformer model?
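Vaswani et al. suspect that for large key dimension d_k the dot products grow large in magnitude and push the softmax into regions with very small gradients, which is why they scale the scores by 1/sqrt(d_k). The following is a minimal pure-Python sketch of that effect for a single query. The function names and the example vectors are assumptions for illustration, not taken from the paper.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys, scale=True):
    """Dot-product attention weights of one query over a list of keys.

    With scale=True the scores are divided by sqrt(d_k) before the softmax.
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    if scale:
        scores = [s / math.sqrt(d_k) for s in scores]
    return softmax(scores)


# Illustrative vectors with d_k = 64 (made-up values).
QUERY = [1.0] * 64
KEYS = [[1.0] * 64, [0.5] * 64]

unscaled = attention_weights(QUERY, KEYS, scale=False)
scaled = attention_weights(QUERY, KEYS, scale=True)
```

In this example the unscaled weights are almost one-hot, while the scaled weights still leave noticeable mass on the second key, so the softmax stays in a region where gradients are not vanishingly small.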

6.2 (2 P) The input width of a Transformer model is fixed. How do you handle shorter inputs?
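A common way to handle shorter inputs is to pad them to the fixed width and pass a mask so that attention can ignore the padded positions. The sketch below assumes a hypothetical padding id of 0 and a helper name chosen for illustration only.

```python
def pad_and_mask(token_ids, max_len, pad_id=0):
    """Pad token_ids to max_len and return (padded_ids, attention_mask).

    The mask holds 1 for real tokens and 0 for padding positions.
    pad_id=0 is an assumption; real vocabularies define their own padding id.
    """
    if len(token_ids) > max_len:
        raise ValueError("input is longer than the fixed model width")
    n_pad = max_len - len(token_ids)
    padded = list(token_ids) + [pad_id] * n_pad
    mask = [1] * len(token_ids) + [0] * n_pad
    return padded, mask
```

For example, `pad_and_mask([7, 8, 9], 5)` yields the ids `[7, 8, 9, 0, 0]` together with the mask `[1, 1, 1, 0, 0]`.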

6.3 (2 P) What is the motivation for the residual connections in the model?

6.4 (2 P) Why are trigonometric functions used for the positional encodings?
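The paper hypothesizes that sinusoids let the model attend by relative position, because for any fixed offset k the encoding of position pos + k is a linear function of the encoding of position pos. The sketch below checks this numerically with the angle-addition identities. It assumes an even `d_model`, and the helper names are hypothetical.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding: sin on even indices, cos on odd indices (even d_model assumed)."""
    encoding = []
    for even_index in range(0, d_model, 2):
        angle = position / (10000 ** (even_index / d_model))
        encoding.append(math.sin(angle))
        encoding.append(math.cos(angle))
    return encoding


def shift_encoding(encoding, offset, d_model):
    """Rotate each (sin, cos) pair by offset; the rotation depends only on the offset."""
    shifted = []
    for even_index in range(0, d_model, 2):
        freq = 1.0 / (10000 ** (even_index / d_model))
        sin_p, cos_p = encoding[even_index], encoding[even_index + 1]
        sin_k, cos_k = math.sin(offset * freq), math.cos(offset * freq)
        shifted.append(sin_p * cos_k + cos_p * sin_k)  # sin(a + b)
        shifted.append(cos_p * cos_k - sin_p * sin_k)  # cos(a + b)
    return shifted
```

Applying `shift_encoding(positional_encoding(5, 8), 3, 8)` reproduces `positional_encoding(8, 8)` up to floating-point error, without ever using the absolute position 5 inside the shift.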

6.5 (2 P) How does the model ensure that each attention head attends in a different way?

Problem 7 Alice and Bob (10 credits)

7.1 (5 P) Alice and Bob are active on social media and they’re friends on Facebook. Alice likes to write public text posts about various things on her Facebook account. She has been very active over the last years and has literally written thousands of posts. Bob likes anonymous social media websites, where identities are not known. Recently he stumbled upon an intriguing post there that he believes was written by Alice. Given only her Facebook text posts, how can Bob check his hypothesis with NLP?

7.2 (5 P) How can Alice use NLP techniques to solve the reverse problem and hide her identity from possible adversarial attacks like the one in 7.1? More concretely, how can she construct a system that, given her text post, outputs another text post with the same meaning but hides her identity and style of writing?
